Common applications of Classification:

- Spam filtering: Identifying spam emails in an inbox

- Fraud detection: Detecting fraudulent transactions in financial data

- Medical diagnosis: Diagnosing diseases based on patient data

- Image recognition: Recognizing objects or scene in images

- Recommender system: Recommending products or services to users based on their past behaviors.

Types of Classification

Binary Classification: is the problem of classifying instances into one of two categories. The data we want to classify belongs exclusively to one of those classes. For example, we could label patients as non-diabetic or diabetic. The class prediction is made by determining the probability for each class as a value between 0 (impossible) and 1 (certain). A threshold value, often 0.5, is used to determine the predicted class.

Multiclass Classification: is the problem of classifying instances into one of three or more categories. The data we want to classify belongs exclusively to one of those classes, e.g. to classify if an object on an image is red, green or blue. Multiclass classification can be thought of as a combination of multiple binary classifiers. There are two ways in which you approach the problem:

- square or not

- circle or not

- triangle or not

- hexagon or not

Multi-label Classification: is the problem of classifying instances into two or more classes in which the data we want to classify may belong to none or multiple classes (or all) at the same time, e.g. to classify which traffic signs are contained on an image. Neural network models can be configured to support multi-label classification and can perform well, depending on the specifics of the classification task.

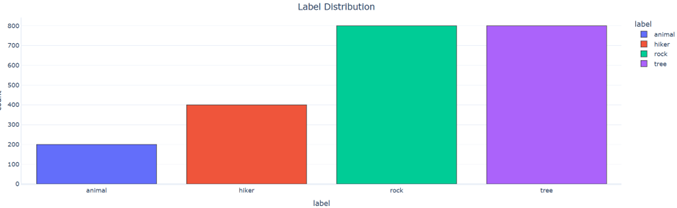

Imbalanced Classification: An imbalanced classification problem is an example of a classification problem where the distribution of examples across the known classes is biased or skewed. The distribution can vary from a slight bias to a severe imbalance where there is one example in the minority class for hundreds, thousands, or millions of examples in the majority class or classes.

Go back to Supervised Learning