In the context of artificial neural networks (ANNs), MNIST (Modified National Institute of Standards and Technology) is a dataset of handwritten digits that is widely used as a benchmark for training and testing ANN models, particularly for image classification tasks.

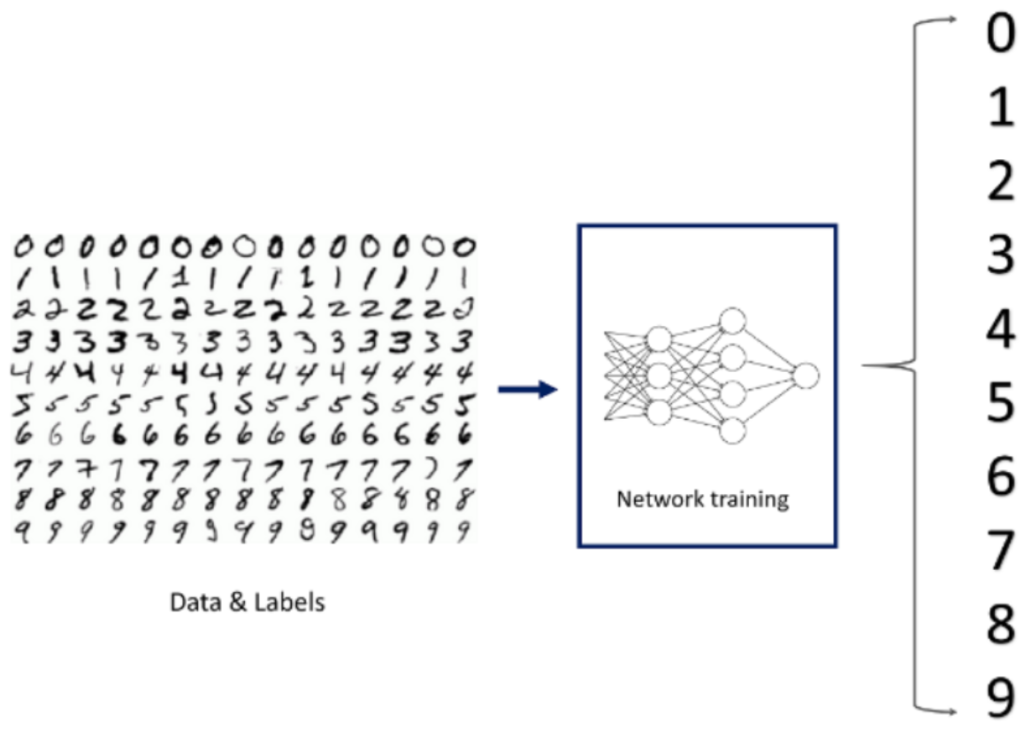

The MNIST dataset consists of 70,000 grayscale images of handwritten digits from 0 to 9. Each image is a 28×28 pixel grid showing a single digit and is labeled with the digit it represents. The dataset is split into a training set of 60,000 images and a test set of 10,000 images.
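
To make these dimensions concrete, here is a minimal sketch of how a single example might be held in memory. The pixel values are invented for illustration, and the 0 to 255 intensity range assumes the usual 8-bit grayscale encoding; nothing here loads the real dataset.

```python
# Illustrative stand-in for one MNIST example; the pixel values are made up.
ROWS, COLS = 28, 28

# A 28x28 grid of grayscale intensities (assumed 8-bit: 0 = background, 255 = full ink).
image = [[0 for _ in range(COLS)] for _ in range(ROWS)]
for r in range(4, 24):
    image[r][14] = 255  # a crude vertical stroke, roughly a handwritten "1"
label = 1  # the digit this image is meant to represent

pixels_per_image = ROWS * COLS          # 28 * 28 = 784 values per image
train_size, test_size = 60_000, 10_000  # split sizes of the dataset
total_pixels = (train_size + test_size) * pixels_per_image

print(pixels_per_image, total_pixels)
```

At one byte per pixel, the whole dataset is only a few tens of megabytes of raw pixel data, which is part of why it is so convenient for quick experiments.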

ANNs, especially convolutional neural networks (CNNs), are commonly used to classify MNIST digits. The typical approach involves designing a neural network architecture suitable for image classification, training the network on the training set, and then evaluating its performance on the test set.

Here’s a basic outline of how ANNs are used with the MNIST dataset:

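- Input: For a fully connected network, each 28×28 image is flattened into a 1D vector of 784 values, which serves as the input to the neural network. (A CNN instead keeps the 2D grid so that its convolutional layers can exploit the spatial layout.)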

- Architecture: The neural network architecture is designed to process these input vectors and learn to classify them into one of the ten possible classes (digits 0 through 9). This architecture often includes one or more hidden layers, typically implemented with densely connected layers (also known as fully connected layers).

- Training: The neural network is trained using the training set of labeled images. During training, backpropagation computes the gradient of a loss function (commonly cross-entropy) with respect to the weights and biases, and an optimization algorithm such as gradient descent uses those gradients to update the parameters so that the predicted labels move closer to the actual labels.

- Evaluation: After training, the performance of the neural network is evaluated using the test set of labeled images. The accuracy of the network in correctly classifying the digits in the test set provides insight into its generalization ability.
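
The four steps above can be sketched end to end with nothing but the Python standard library. This is a teaching sketch under stated assumptions, not a reference implementation: `make_image` generates hypothetical synthetic 28×28 images (a bright band whose position depends on the digit) in place of real MNIST data, and the hidden-layer size, learning rate, and epoch count are arbitrary choices. In practice a framework such as PyTorch or Keras would handle most of this.

```python
import math
import random

random.seed(0)
N_IN, N_HIDDEN, N_CLASSES = 28 * 28, 8, 10

def make_image(digit):
    """Hypothetical stand-in for an MNIST image: noise plus a bright band set by the digit."""
    img = [[random.random() * 0.1 for _ in range(28)] for _ in range(28)]
    for r in range(2 * digit + 4, 2 * digit + 6):
        img[r] = [1.0] * 28
    return img

def flatten(img):
    """Input step: turn the 28x28 grid into a 784-element vector."""
    return [p for row in img for p in row]

def make_split(per_class):
    return [(flatten(make_image(d)), d) for d in range(N_CLASSES) for _ in range(per_class)]

train_set, test_set = make_split(5), make_split(2)

# Architecture step: one dense hidden layer (ReLU) and a dense 10-way output layer.
W1 = [[random.gauss(0, 0.05) for _ in range(N_IN)] for _ in range(N_HIDDEN)]
b1 = [0.0] * N_HIDDEN
W2 = [[random.gauss(0, 0.05) for _ in range(N_HIDDEN)] for _ in range(N_CLASSES)]
b2 = [0.0] * N_CLASSES

def forward(x):
    """Return hidden activations and softmax class probabilities for one input vector."""
    h = [max(0.0, sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
    logits = [sum(w * hi for w, hi in zip(row, h)) + b for row, b in zip(W2, b2)]
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return h, [e / total for e in exps]

def train_step(x, y, lr=0.01):
    """Training step: backpropagation plus one stochastic gradient descent update."""
    h, probs = forward(x)
    # Gradient of the cross-entropy loss with respect to the logits: probs - one_hot(y).
    d_logits = [p - (1.0 if k == y else 0.0) for k, p in enumerate(probs)]
    # Backpropagate into the hidden layer (ReLU passes gradient only where h > 0).
    d_h = [sum(d_logits[k] * W2[k][j] for k in range(N_CLASSES)) if h[j] > 0 else 0.0
           for j in range(N_HIDDEN)]
    for k in range(N_CLASSES):
        for j in range(N_HIDDEN):
            W2[k][j] -= lr * d_logits[k] * h[j]
        b2[k] -= lr * d_logits[k]
    for j in range(N_HIDDEN):
        if d_h[j] != 0.0:
            row = W1[j]
            for i in range(N_IN):
                row[i] -= lr * d_h[j] * x[i]
            b1[j] -= lr * d_h[j]
    return -math.log(max(probs[y], 1e-12))  # cross-entropy loss for this example

def predict(x):
    _, probs = forward(x)
    return probs.index(max(probs))

def accuracy(dataset):
    """Evaluation step: fraction of examples whose predicted digit matches the label."""
    return sum(1 for x, y in dataset if predict(x) == y) / len(dataset)

for epoch in range(10):
    random.shuffle(train_set)
    mean_loss = sum(train_step(x, y) for x, y in train_set) / len(train_set)

test_accuracy = accuracy(test_set)
print(f"mean training loss: {mean_loss:.3f}, test accuracy: {test_accuracy:.2f}")
```

The printed numbers depend on the seed and the hyperparameters chosen here and say nothing about performance on real MNIST. The point is the shape of the workflow: flatten, forward pass, gradient update, then accuracy on held-out data.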

The MNIST dataset has been instrumental in advancing the field of deep learning, serving as a standard benchmark for comparing the performance of different neural network architectures and training techniques. It has been used extensively in research and education to demonstrate the effectiveness of ANNs for image classification tasks.